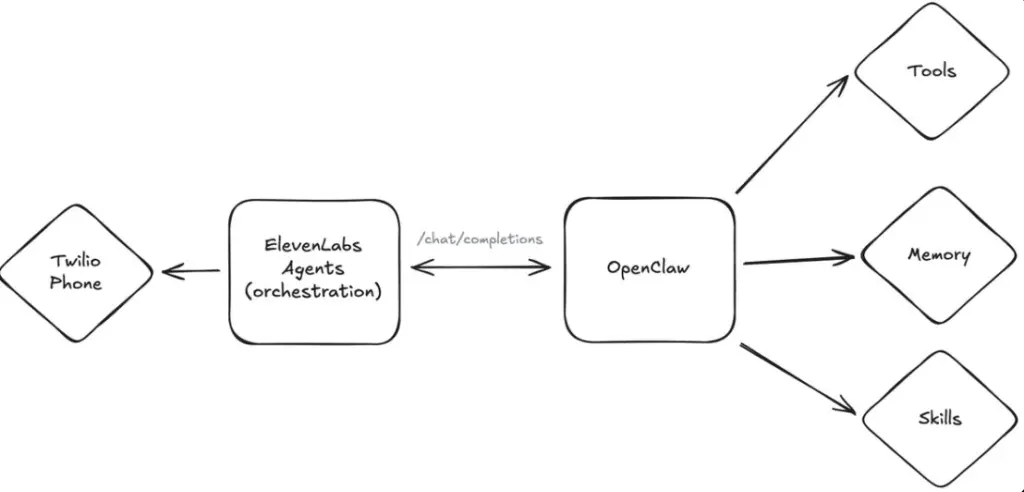

ElevenLabs announced new developer support that allows OpenClaw AI agents to be accessed by phone through its Agents platform.

The update enabled developers to call their OpenClaw bots and interact with them using real-time voice conversations.

According to ElevenLabs, the Agents platform handled voice-specific functions such as speech recognition, speech synthesis, turn-taking, and phone integration.

OpenClaw continued to manage reasoning, memory, tools, and skills. The two systems communicated through a standard OpenAI-compatible chat completions interface.
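To make the "OpenAI-compatible chat completions interface" concrete, here is a minimal sketch of the response shape such a backend returns. It is not OpenClaw's or ElevenLabs' actual code: `run_agent` and `handle_chat_completion` are hypothetical names, the echo logic stands in for real reasoning, and streaming responses (which a voice platform may require) are left out.

```python
import time
import uuid


def run_agent(messages):
    """Hypothetical stand-in for the agent's reasoning step.

    A real backend would consult memory, tools, and skills here;
    this sketch only echoes the most recent user message.
    """
    last_user = next(
        (m["content"] for m in reversed(messages) if m["role"] == "user"),
        "",
    )
    return f"You said: {last_user}"


def handle_chat_completion(body):
    """Turn a chat-completions request dict into a response dict."""
    messages = body.get("messages", [])
    reply = run_agent(messages)
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": body.get("model", "unknown"),
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": reply},
                "finish_reason": "stop",
            }
        ],
    }
```

Because the voice layer only needs this request and response contract, any backend that speaks it could in principle sit behind the agent.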

The setup allowed developers to expose their OpenClaw instance via a public endpoint and connect it to an ElevenLabs Agent configured with a custom large language model.

Once connected, the agent routed full conversational context to OpenClaw on each turn, enabling persistent memory and task tracking.
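Sending the full context on each turn can be pictured as a message list that grows with every exchange. The sketch below is illustrative only: `build_turn_request`, `record_reply`, and the model name `openclaw-demo` are assumptions, not part of either product.

```python
def build_turn_request(history, user_text, model="openclaw-demo"):
    """Append the caller's latest utterance and return the full payload.

    The whole history is sent every time, so the backend can resolve
    references to earlier turns without keeping per-call state itself.
    """
    history.append({"role": "user", "content": user_text})
    return {"model": model, "messages": list(history)}


def record_reply(history, assistant_text):
    """Store the assistant's answer so the next turn includes it."""
    history.append({"role": "assistant", "content": assistant_text})


# A two-turn phone conversation, starting from a system prompt.
history = [{"role": "system", "content": "You are a phone assistant."}]

first = build_turn_request(history, "Remember that the deploy is at 5 pm.")
record_reply(history, "Noted.")

second = build_turn_request(history, "When is the deploy?")
```

The second request carries the earlier "deploy is at 5 pm" message, which is what lets the backend answer a follow-up question on a later turn.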

For phone-based access, developers could link a Twilio number to the ElevenLabs Agent.

After configuration, users could call the number and speak directly with their OpenClaw agent, including asking for updates, saving information, or receiving summaries while away from a keyboard.

The integration targeted developers building conversational AI systems that extended beyond text-based interfaces.

Image Credit: ElevenLabs

Why This Matters Today

You are seeing AI agents expand from chat interfaces into real-world communication channels.

Voice access removes friction for users who cannot look at a screen, for example while driving or multitasking.

By separating responsibilities between systems, ElevenLabs and OpenClaw illustrated a modular approach to agent design.

ElevenLabs focused on voice and telephony orchestration, while OpenClaw handled reasoning and execution. This split reduces the complexity developers face when building fully conversational agents from scratch.

Phone-based AI agents also broadened use cases beyond customer support.

Developers can use them as personal assistants, to monitor long-running tasks, or to retrieve contextual updates without manual input. Persistent context across voice sessions makes these interactions more practical than traditional voice bots.

The use of standard APIs and third-party telephony services lowered barriers to adoption. Developers could reuse existing OpenClaw agents rather than redesigning workflows for voice.

As AI agents increasingly move into ambient and hands-free environments, integrations like this point toward a shift from chat-first AI to multimodal, always-available systems.

Our Key Takeaways:

ElevenLabs enabled phone-based access to OpenClaw AI agents through its Agents platform.

The integration splits voice orchestration from reasoning and tool execution. Developers can now call AI agents using standard telephony services.

The update highlighted growing momentum toward voice-native AI workflows.

- ElevenLabs added voice and phone support for OpenClaw AI agents.

- The system routed conversations through standard chat completion APIs with full context.

- Developers can connect agents to phone numbers for hands-free, real-time interaction.
