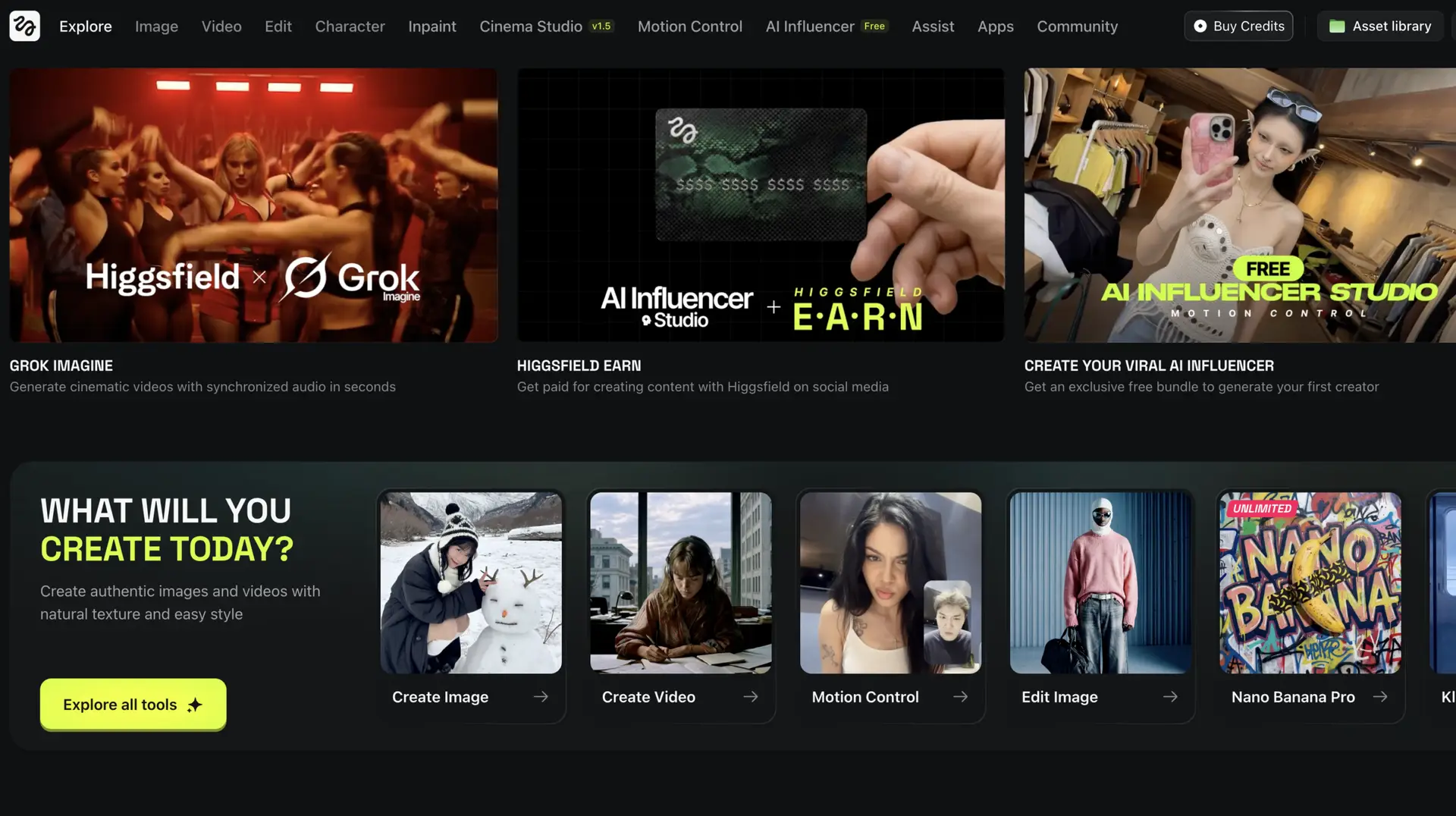

Higgsfield shared details of Vibe-Motion, an upcoming motion design feature for its AI video platform, ahead of a planned launch.

The company described the tool as a prompt-to-motion system that uses Anthropic's Claude model as its reasoning engine, designed to give creators real-time control over motion design.

According to Higgsfield, Vibe-Motion would generate curated motion design from a single prompt and then let users fine-tune layout, timing, spacing, and transitions live on a canvas.

Unlike existing AI video tools that rely on static outputs, Vibe-Motion would use Claude to reason through creative intent before rendering, translating a creator's direction into structured motion logic.

The company said the feature would support layering motion graphics on top of existing video, applying logos and brand kits, and keeping revisions consistent by retaining conversational context across the session.

Higgsfield positioned Claude as an "intelligent motion agent" that understood revision requests and preserved design intent across iterations.

The company did not announce a specific release date but indicated that Vibe-Motion would launch soon as part of its broader Higgsfield platform.

Why This Matters Today

You are seeing rapid growth in AI video generation, but motion design has remained a weak point.

Most tools produce fixed outputs that require full regeneration for even minor changes, limiting their usefulness for professional workflows.

Higgsfield’s preview of Vibe-Motion pointed to a different approach.

By using a reasoning model to define motion logic before rendering, the company aimed to reduce trial-and-error prompting and make motion design more predictable.

Real-time adjustment aimed to close a long-standing gap between AI-generated video and traditional motion design tools, where editors can tweak individual elements without starting over.

The use of Claude also reflected a broader industry shift.

Large language models were increasingly being embedded as reasoning layers inside software, moving beyond text generation into decision-making and creative control.

In this case, Claude’s role extended to understanding design language, brand context, and stylistic references.

If the product performed as described, it could lower the cost and time required for motion-heavy content.

The upcoming launch would test whether creators preferred reasoning-driven control over the faster but less flexible generation tools already on the market.

Our Key Takeaways:

Higgsfield revealed Vibe-Motion as an upcoming motion design feature built around AI reasoning rather than static generation.

The tool was designed to interpret creative intent, generate motion logic, and allow live refinement without restarting workflows.

Higgsfield positioned Claude as an intelligent agent inside the video editor rather than a background model. No launch date was disclosed, but the company said the feature was coming soon.

- Higgsfield previewed Vibe-Motion, a Claude-powered tool for prompt-driven motion design with real-time control.

- The feature focused on reasoning, context retention, and predictable motion behavior instead of one-off renders.

- The market response after launch will indicate whether reasoning-based motion tools can replace traditional workflows.


Wanna know what’s trending online every day? Subscribe to Vavoza Insider to access the latest business and marketing insights, news, and trends daily with unmatched speed and conciseness. 🗞️